Hi Raja,

I just found out something surprising that may be of interest.

My measurement consists of two phases; between these phases, data recording stops for a short time. The slave system sends a signal to mark the last block of data of a phase. After receiving this last block, I flush the system's file buffers for safety reasons: if there is a crash (mainly during development), I may still get useful information.

I also write a marker into the file and can see this marker in the file afterwards, so I am quite sure that this flush is done only once, at the end of a phase.
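The end-of-phase handling described above can be sketched roughly as follows. This is not the original code (that is only in the screenshot); it assumes a C++ `std::ofstream` that stays open for both phases, and the class `PhaseRecorder`, its methods `write_block` and `end_phase`, and the marker text `#END_OF_PHASE` are all hypothetical names chosen for illustration.

```cpp
#include <fstream>
#include <string>
#include <vector>

// Hypothetical recorder: one file is kept open across both measurement phases.
class PhaseRecorder {
public:
    explicit PhaseRecorder(const std::string& path)
        : out_(path, std::ios::binary | std::ios::trunc) {}

    bool is_open() const { return out_.is_open(); }
    bool good() const { return out_.good(); }
    int flush_count() const { return flush_count_; }

    // Append one block of measurement data (illustrative interface).
    void write_block(const std::vector<char>& block) {
        out_.write(block.data(), static_cast<std::streamsize>(block.size()));
    }

    // Called once when the slave system signals the last block of a phase:
    // write a marker, then flush so the buffered bytes are handed to the OS.
    void end_phase(int phase) {
        const std::string marker = "#END_OF_PHASE " + std::to_string(phase) + "\n";
        out_.write(marker.data(), static_cast<std::streamsize>(marker.size()));
        out_.flush();  // the call whose removal restored the original timing
        ++flush_count_;
    }

private:
    std::ofstream out_;
    int flush_count_ = 0;
};
```

Counting the flushes in the recorder is just one way to double-check, alongside the marker in the file, that the flush really happens only once per phase.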

I found out that after this short break, the write cycles to the file (which I keep open the whole time) take twice as long as before.

Reviewing the code, I found only one difference between the phases: the flush.

When I comment out this flush instruction, voilà: the write cycles during the second phase take the same time as during the first phase. Ohhh.
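To compare the write-cycle times of the two phases numerically, each write call can be wrapped in a monotonic-clock measurement. The sketch below assumes nothing about the original measurement code; `WriteStats` and `timed_write` are hypothetical helpers.

```cpp
#include <chrono>
#include <cstddef>
#include <ratio>

// Hypothetical helper: accumulates the durations of individual write cycles
// so the mean time per write can be compared between phases.
struct WriteStats {
    std::size_t count = 0;
    std::chrono::nanoseconds total{0};

    // Mean duration per write in microseconds (0 if nothing was recorded).
    double mean_us() const {
        if (count == 0) return 0.0;
        return std::chrono::duration<double, std::micro>(total).count() /
               static_cast<double>(count);
    }
};

// Time one write cycle with steady_clock (unaffected by wall-clock changes)
// and add the result to `stats`.
template <typename WriteFn>
void timed_write(WriteStats& stats, WriteFn&& write) {
    const auto start = std::chrono::steady_clock::now();
    write();
    const auto stop = std::chrono::steady_clock::now();
    stats.total += std::chrono::duration_cast<std::chrono::nanoseconds>(stop - start);
    ++stats.count;
}
```

Keeping one `WriteStats` per phase and wrapping each `out.write(...)` call in `timed_write` would turn the "double the time" observation into two comparable mean values, with and without the flush.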

Here is the code snippet:

[upload|Dcd1DlvvDqL9Duj9JxYfAtNBf3Q=]

It shows both methods I used, as well as the flush instruction.
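Since the snippet itself is only available as a screenshot, here is a rough sketch of what the two variants typically look like. It assumes that "native file IO" means C stdio (`FILE*`), because the post refers to `fflush(…)`; the function names `write_stream` and `write_stdio` and the `flush` parameter are hypothetical.

```cpp
#include <cstddef>
#include <cstdio>
#include <fstream>

// Variant A: C++ stream. flush() empties the std::ofstream buffer.
bool write_stream(std::ofstream& out, const char* data, std::size_t len, bool flush) {
    out.write(data, static_cast<std::streamsize>(len));
    if (flush) out.flush();
    return static_cast<bool>(out);
}

// Variant B: C stdio ("native" file I/O, as assumed here).
// fflush() empties the FILE* buffer.
bool write_stdio(std::FILE* f, const char* data, std::size_t len, bool flush) {
    if (std::fwrite(data, 1, len, f) != len) return false;
    if (flush && std::fflush(f) != 0) return false;
    return true;
}
```

Both variants add their own user-space buffer on top of the operating system, which is one plausible reason for the small runtime difference between them.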

Measurement results using streams:

[upload|2KplDCvoseIDjbh58qeBAhmmAAA=]

Here the out.flush() instruction is executed, and the time for file I/O doubles (see markers).

[upload|8q2M+zQmCpRR8PG3OpPRfOlm7JY=]

Without the flush instruction, it looks fine.

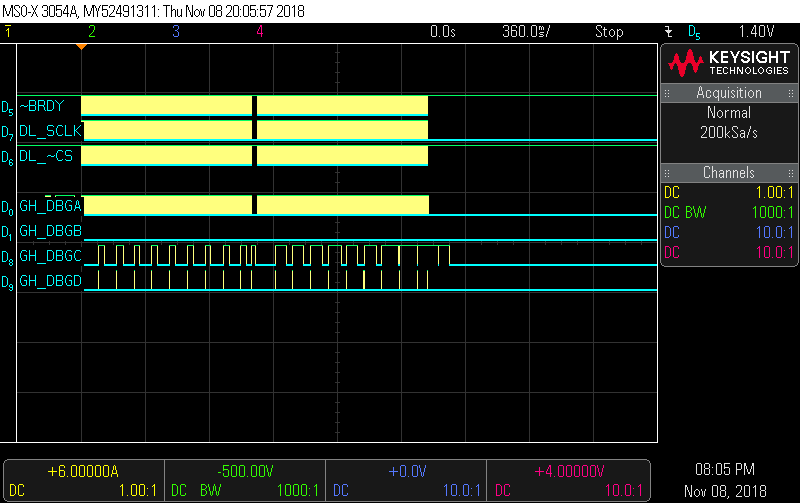

Measurement results using native file I/O:

[upload|RPzQM2Dpqd2e/JZZfrtX/SM2ItQ=]

After fflush(…), writing to the file takes twice as long.

[upload|6DrwWQ+I367MoEd0SOo10k0UOjI=]

Without the fflush(…) it looks fine.

It also turns out that the runtime difference between native file I/O and streams isn't that dramatic, but it is there.

As my software does the same things during all these measurements and only the flushes made the difference, something must be going on in the implementation of the system itself: the block device driver, the USB driver, or …
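One way to narrow down which layer is involved, offered only as a sketch: `ofstream::flush()` and `fflush()` hand the buffered data to the operating system but do not by themselves force it onto the storage device; on POSIX systems `fsync()` requests that second step. The post does not say which OS the module runs, so the code below assumes a POSIX/Linux target, and `flush_user_buffer` and `flush_to_device` are hypothetical names, not part of the original code.

```cpp
#include <cstdio>
#include <stdio.h>   // fileno (POSIX)
#include <unistd.h>  // fsync (POSIX); assumption: POSIX/Linux target

// Level 1: move data from the stdio buffer into the kernel (what fflush does).
bool flush_user_buffer(std::FILE* f) {
    return std::fflush(f) == 0;
}

// Level 2: additionally ask the kernel to write the file's data to the device.
// This goes beyond what the original code does.
bool flush_to_device(std::FILE* f) {
    if (std::fflush(f) != 0) return false;
    return fsync(fileno(f)) == 0;
}
```

If the second phase slows down only with one of these variants, that would hint at whether the effect is tied to the user-space flush or to the device write-back path (block device or USB driver).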

I can’t use the micro SD card to get closer to the problem, because Toradex told me there is a known bug that will take time to fix.

With best regards

Gerhard