Webinar:

AI2GO: A New, Self-Serve Platform for Deploying AI at the Edge

Date: July 25, 2019

Traditional deep learning models require large amounts of computing power, so deploying at the edge has required either expensive hardware or a constant connection to the cloud. Xnor’s new AI2GO platform enables enterprises and individual developers to easily deploy hyper-efficient deep learning models onto edge devices. AI2GO hosts hundreds of Xnor’s pre-trained AI models, each tuned for a specific hardware target, use case, and set of performance characteristics. The models cover computer vision tasks such as object and person detection, image segmentation, and action classification, and they run on a selection of low-power hardware such as the Toradex Apalis System on Module with the NXP® i.MX 6 and i.MX 8 SoCs.

As an example, an engineer at a building automation company could download an object detection model that is tuned for a commercial office use case and runs on an Apalis SoM with customized speed and memory usage. Engineers and product leads from industries such as commercial security, retail analytics, smart home, IoT, automotive, and consumer devices have already been using AI2GO to evaluate models for their use cases and to see how the models might fit into their product offerings.

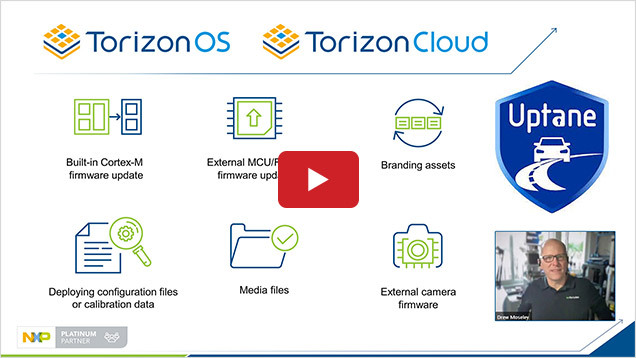

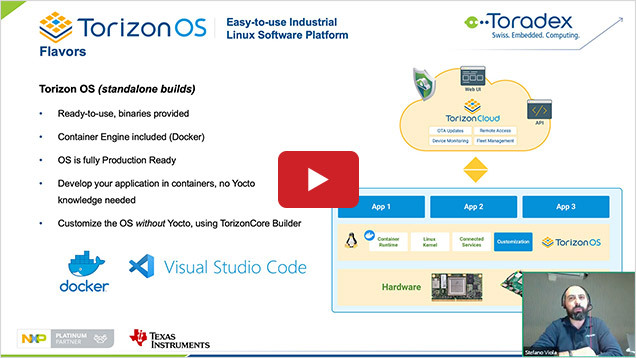

Xnor makes this process as simple as possible, so getting an AI2GO model up and running can take less than 30 minutes. AI2GO delivers models via modules called Xnor Bundles (XBs). An XB contains an AI model and an inference engine, allowing the model to run on-device with just a few lines of implementation code. AI2GO is also integrated with Torizon, Toradex’s new easy-to-use Industrial Linux Platform.

Xnor’s AI2GO is changing how we think about access to this kind of technology and where the industry could be headed. Watch this webinar to see how you can try out AI2GO for yourself.