Building a Video Surveillance System with Torizon

Toradex recently rolled out the first plank in the Torizon Platform: over-the-air (OTA) updates. Let's have some fun with this. In this blog post, we will develop a video surveillance system running on a Toradex System on Module (SoM), using open source components. For this post, we are using the Apalis iMX8 module, but any module that can run the TorizonCore operating system can be used; if your module does not have onboard Wi-Fi, you may need to use Ethernet or a USB adapter. You can find some options in our Wi-Fi Database.

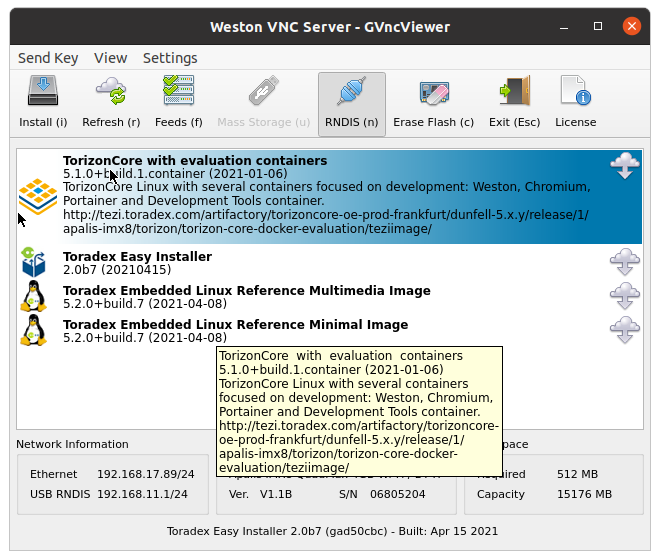

To get started, we will follow the basic hardware setup instructions from our Quick Start Guide. Follow the prompts on that site to select the SoM, Carrier Board, Target Device OS, and Development PC OS. Follow the first two green boxes for "Unboxing and Setup Cables" and "Installing the Operating System". For now, let's use the current released version of TorizonCore, which at the time of this writing is 5.1.0+build.1.container (2021-01-06).

After installing, reboot your board. Don't forget to disable recovery mode if you used it to run the Easy Installer. You will need to log in to the system; follow the steps in our Quick Start Guide if you are not familiar with UART console and SSH connections. On your first login with username torizon and password torizon, TorizonCore will prompt you to change the password. Now, let's enable the Wi-Fi and connect to your access point. Listing 1 shows the steps needed for most Wi-Fi setups.

```
apalis-imx8-06805204:~$ sudo -i
Password:
root@apalis-imx8-06805204:~# rfkill unblock all
root@apalis-imx8-06805204:~# nmcli radio wifi on
root@apalis-imx8-06805204:~# nmcli dev wifi list
IN-USE  BSSID              SSID    MODE   CHAN  RATE        SIGNAL  BARS  SECURITY
        00:11:22:33:44:55  Lab     Infra  36    405 Mbit/s  89      ****  WPA2
        11:22:33:44:55:66  Public  Infra  1     195 Mbit/s  12      *     WPA2
root@apalis-imx8-06805204:~# nmcli --ask dev wifi connect Lab
Password: ********************************
Device 'mlan0' successfully activated with 'xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx'.
root@apalis-imx8-06805204:~# ifconfig mlan0
mlan0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500  metric 1
        inet 192.168.17.187  netmask 255.255.255.0  broadcast 192.168.17.255
        inet6 fe80::9d90:953a:cba4:63e0  prefixlen 64  scopeid 0x20
        ether d8:c0:a6:cf:63:29  txqueuelen 1000  (Ethernet)
        RX packets 12  bytes 2217 (2.1 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 31  bytes 4602 (4.4 KiB)
        TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0
```

In addition to the basic cabling we did earlier, we need to connect a USB webcam to the board. I used a Genius 120-degree Ultra Wide Angle Full HD Conference Webcam, but any webcam recognized by the kernel will do.

For the surveillance software itself, we will use motionEye, an open source video surveillance application with several attractive features:

- Easy to use
- Able to monitor multiple cameras
- Mobile apps for iOS and Android
- Simple to run in a container

These points make motionEye a good fit for running in Torizon. The motionEye project has good instructions on running it within Docker, and following them, we can do a test run directly from the Apalis device's shell prompt. Listing 2 shows the commands. Note that Docker Hub does not contain an aarch64 version of this container image, but we can use the armhf version just fine. This means that the code we are running is not fully optimized for our hardware, likely will not properly use hardware acceleration, and may run poorly. This can be remedied by building a containerized version for aarch64 with proper acceleration enabled, but that is out of scope for this post.

```
apalis-imx8-06805204:~$ docker run --name="motioneye" \
> -p 8765:8765 \
> --hostname="motioneye" \
> -v /etc/localtime:/etc/localtime:ro \
> -v /etc/motioneye:/etc/motioneye \
> -v /var/lib/motioneye:/var/lib/motioneye \
> --restart="always" \
> --detach=true \
> ccrisan/motioneye:master-armhf
Unable to find image 'ccrisan/motioneye:master-armhf' locally
master-armhf: Pulling from ccrisan/motioneye
eaaa43e22ddc: Pull complete
38b5f9df17bb: Pull complete
5a36f2cd0f41: Pull complete
957aa23b13ac: Pull complete
1b23938bf6aa: Pull complete
Digest: sha256:1d7abea3e92e8cc3de1072a0c102a9d91ef2aea2a285d7863b1a5dadd61a81e1
Status: Downloaded newer image for ccrisan/motioneye:master-armhf
0cea6b7f833acd7038c61eeb76965fcac16782af716f33ebd7f6ed424532aa40
```

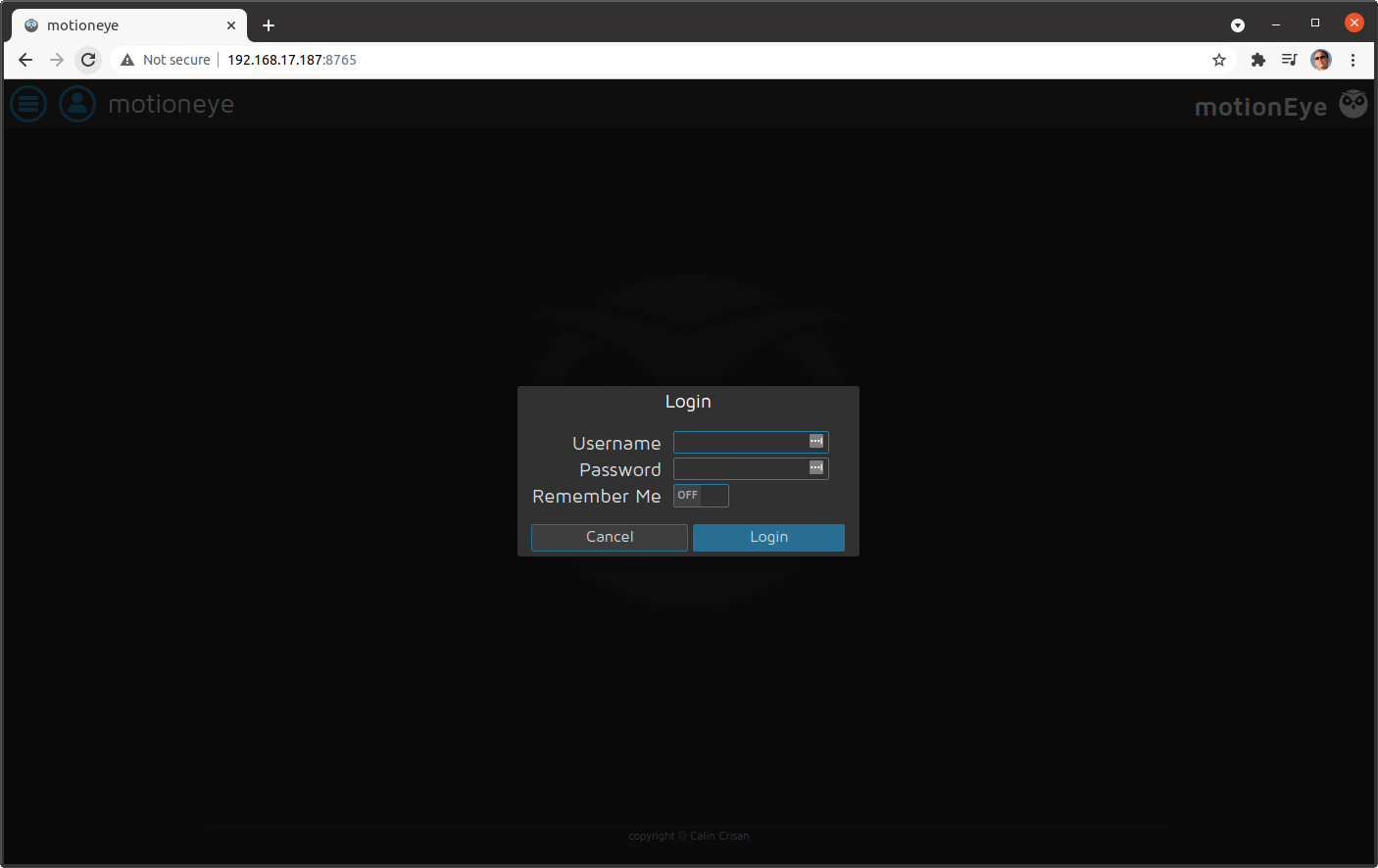

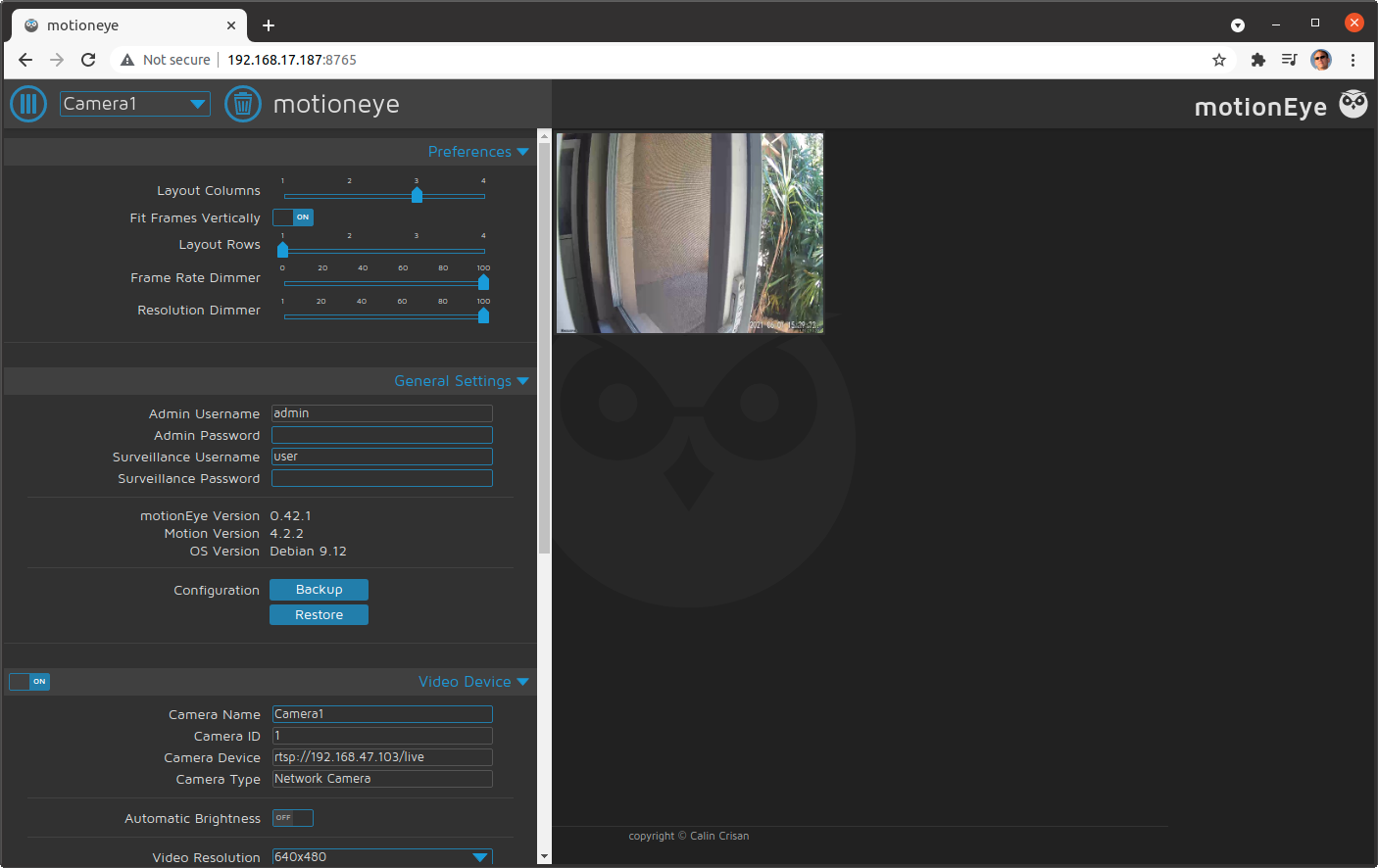

We can now point our web browser at the IP address of our board on port 8765 to see the login prompt for motionEye as shown in Figure 2.
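If you are not sure which address to use, it is the `inet` address printed by `ifconfig mlan0` in Listing 1. The snippet below is a minimal sketch of extracting that address and printing the motionEye URL. The function name `motioneye_url` is hypothetical, and the sketch assumes the net-tools `ifconfig` output format shown in Listing 1; other tools or versions may print addresses differently.

```shell
#!/bin/sh
# Hypothetical helper: read `ifconfig <iface>` output on stdin and print the
# motionEye URL for the first IPv4 address found.
# Assumes the net-tools format from Listing 1: "inet 192.168.17.187 netmask ..."
motioneye_url() {
    port="${1:-8765}"    # motionEye web port published with -p 8765:8765
    # awk strips leading whitespace, so $1 is the keyword; "inet6" lines do
    # not match because the comparison is exact. Stop after the first match.
    awk -v port="$port" '$1 == "inet" { printf "http://%s:%s\n", $2, port; exit }'
}
```

On the device, `ifconfig mlan0 | motioneye_url` would print `http://192.168.17.187:8765` for the interface shown in Listing 1.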

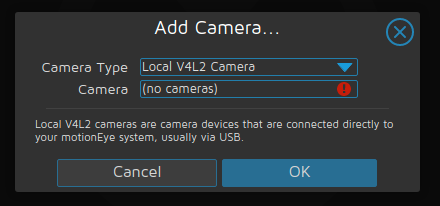

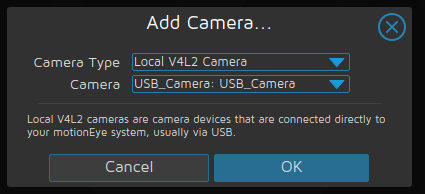

We can log in with username admin and no password (you should definitely change that). Since we have not yet added any cameras, motionEye displays a prompt for us to add one. However, when we click on the prompt, we see that no V4L2 cameras are available. This is because we did not pass the camera's video devices into the container when we launched it; we will fix that later.

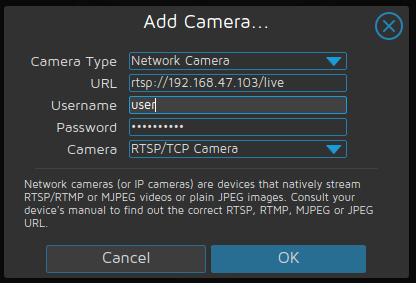

For now, let's continue testing the system by adding a network-attached camera. I have a Wyze Cam Pan with Wyze's RTSP-enabled firmware installed, which makes configuration within motionEye simple. As you can see in Figure 4, you provide the RTSP URL, username, and password, then click OK to add the camera. Within a few seconds, the web interface will update to show the camera's video feed.

At this point, we have proven the basic functionality of a video surveillance system. We have done all of these steps manually on the deployed system, which is fine for development and troubleshooting but does not scale well or provide a reproducible build setup for product development. We will address these shortcomings shortly.

First, let's apply some Docker best practices.

If we take a closer look at the docker invocation, we can see that it uses three mount points: /etc/localtime, /etc/motioneye, and /var/lib/motioneye. The /etc/localtime mount is passed into the container read-only, so we need not worry about the container changing it. The other two mounts work when specified as host pathnames, but for better compatibility with the Torizon OTA system, we will change them to proper Docker volumes. The OTA system is specifically designed so that updates to the base OS are independent of updates to the containers, and using Docker volumes ensures that all data used by the containers persists across updates.
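The distinction Docker draws between these two kinds of `-v` arguments can be sketched in a few lines of shell. This is a simplification for illustration, assuming the `SRC:DST[:OPTS]` form of `-v` used in our command: an absolute source path is a host bind mount, while a bare name refers to a Docker-managed volume. The `mount_kind` function is hypothetical, and it ignores the `--mount` syntax and edge cases such as relative paths.

```shell
#!/bin/sh
# Hypothetical helper: classify a `docker run -v SRC:DST[:OPTS]` spec.
# Simplification: an absolute SRC is a host bind mount; anything else is
# treated as the name of a Docker-managed (named) volume.
mount_kind() {
    src="${1%%:*}"    # keep only the text before the first colon
    case "$src" in
        /*) echo bind ;;      # e.g. /etc/localtime:/etc/localtime:ro
        *)  echo volume ;;    # e.g. motioneye-etc:/etc/motioneye
    esac
}
```

For example, `mount_kind /etc/motioneye:/etc/motioneye` prints `bind`, while `mount_kind motioneye-etc:/etc/motioneye` prints `volume`.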

We also want to be able to use a local USB camera as part of our surveillance system. To do so, we need to make its video devices available inside the container so that motionEye can use them. First, determine which nodes exist without the USB camera connected:

```
apalis-imx8-06805204:~$ ls /dev/video*
/dev/video0  /dev/video1  /dev/video12  /dev/video13
```

Now, connect the camera and determine which nodes have been added:

```
apalis-imx8-06805204:~$ ls /dev/video*
/dev/video0  /dev/video1  /dev/video12  /dev/video13  /dev/video2  /dev/video3
```

In this case, the new nodes are /dev/video2 and /dev/video3 (yours may be different). To run the motionEye container with data volumes and access to the local V4L2 camera, run the commands shown in Listing 5 below.

```
apalis-imx8-06805204:~$ docker stop motioneye && docker rm motioneye
motioneye
motioneye
apalis-imx8-06805204:~$ docker volume create motioneye-etc && docker volume create motioneye-varlib
motioneye-etc
motioneye-varlib
apalis-imx8-06805204:~$ docker run --name="motioneye" \
> -p 8765:8765 \
> --hostname="motioneye" \
> -v /etc/localtime:/etc/localtime:ro \
> -v motioneye-etc:/etc/motioneye \
> -v motioneye-varlib:/var/lib/motioneye \
> --device /dev/video2 \
> --device /dev/video3 \
> --restart="always" \
> --detach=true \
> ccrisan/motioneye:master-armhf
910f623ee22b7f19569c3c3202ca39f61ae41681a7e0c6864f1fec5a70fcabda
```
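Comparing the two `ls /dev/video*` listings by eye works for one camera, but the comparison is easy to script. The following is a minimal sketch with two hypothetical helpers: `new_video_nodes` prints the nodes present only in the second listing, and `device_flags` turns them into `--device` arguments like those in Listing 5. It assumes node paths contain no spaces or glob characters, which holds for /dev/video* names.

```shell
#!/bin/sh
# Hypothetical helper: print nodes that appear in listing $2 but not in $1.
# Both listings may be space- or newline-separated (e.g. from `ls /dev/video*`).
new_video_nodes() {
    # Normalise the "before" list to " a b c " so whole names can be matched.
    known=" $(printf '%s ' $1)"
    for node in $2; do
        case "$known" in
            *" $node "*) ;;                 # existed before the camera: skip
            *) printf '%s\n' "$node" ;;     # new node: print it
        esac
    done
}

# Hypothetical helper: turn device paths into "--device X --device Y".
device_flags() {
    sep=''
    for node in "$@"; do
        printf '%s--device %s' "$sep" "$node"
        sep=' '
    done
    printf '\n'
}
```

As a usage example, capture `BEFORE=$(ls /dev/video*)` with the camera unplugged and `AFTER=$(ls /dev/video*)` after plugging it in; then `device_flags $(new_video_nodes "$BEFORE" "$AFTER")` prints `--device /dev/video2 --device /dev/video3` for the listings above.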

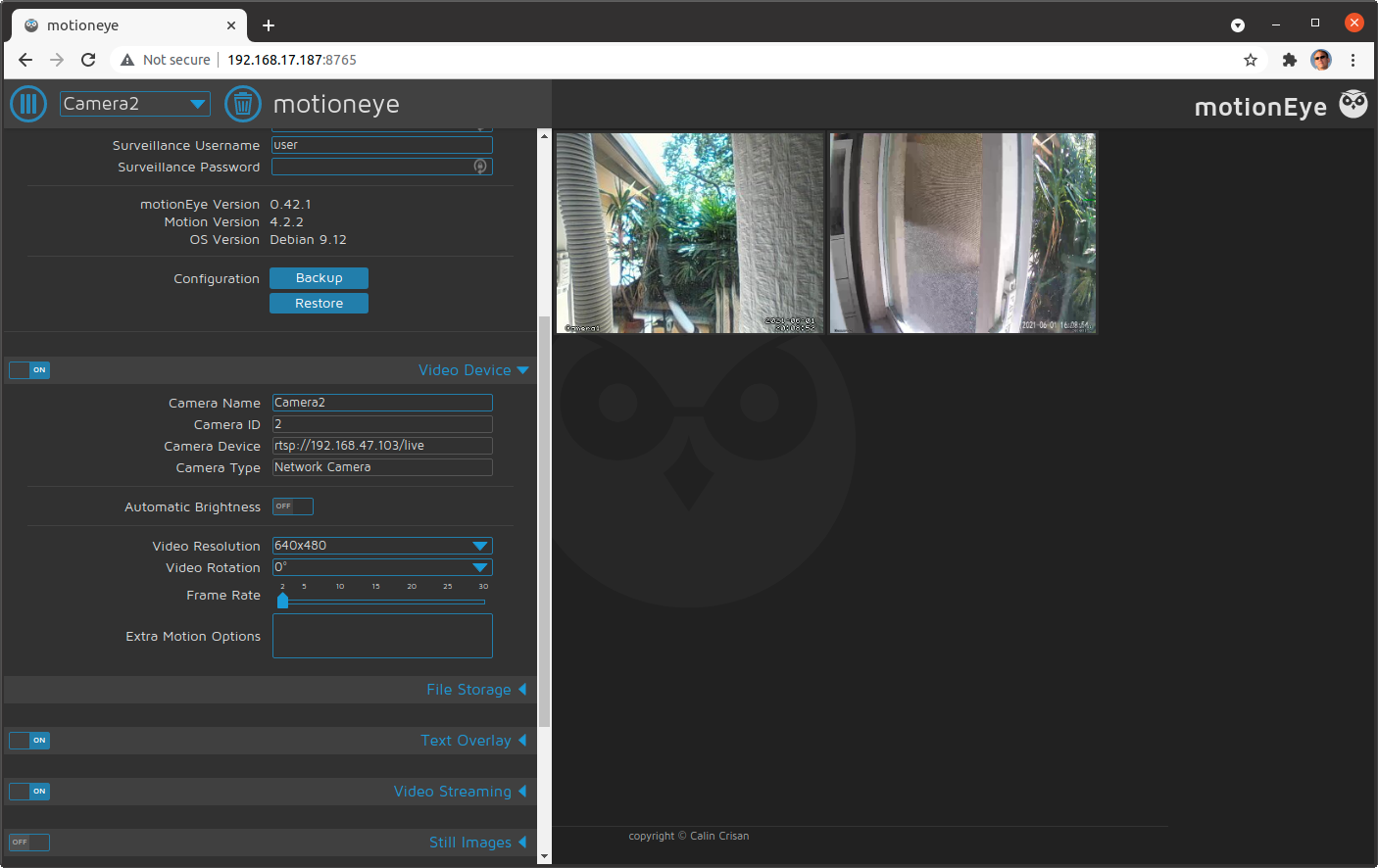

At this point, we need to reconfigure the RTSP camera from earlier since we are using new volumes. We can now also configure the USB webcam using the camera type "Local V4L2 Camera".

Figure 7 shows the web interface when both cameras are enabled.

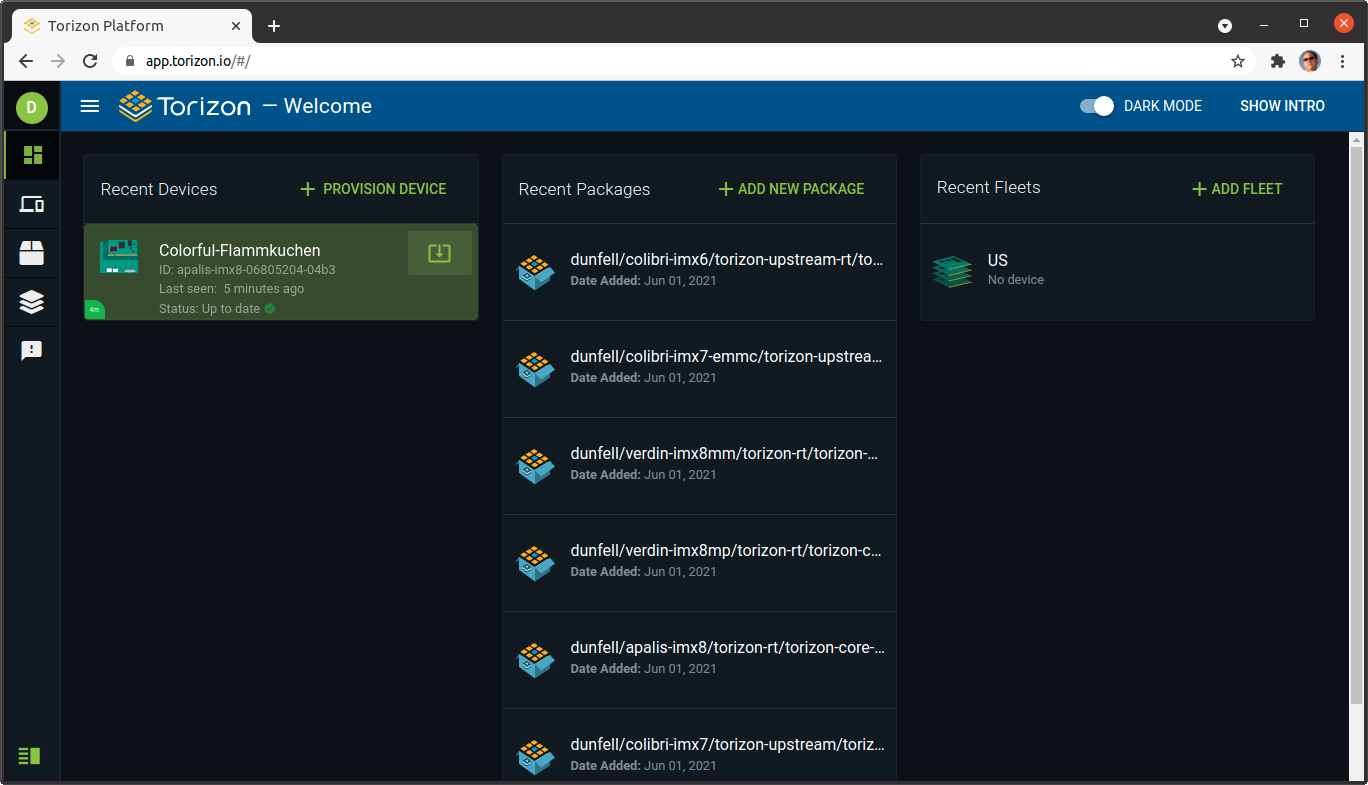

The first step in enabling our system for OTA updates is to add it to your device fleet in the Torizon platform dashboard. Once you have logged in, there is a link titled + PROVISION DEVICE. Clicking that link will bring up a dialog with a command you run on the device. Copy this command and paste it into a shell prompt on your device, and the aktualizr service will launch and connect to the server. The device will then appear in your device list with an auto-generated name.

Let's go ahead and remove the existing container so that we can fully test the OTA delivery. Enter the commands from the following listing to clean up.

```
apalis-imx8-06805204:~$ docker stop motioneye && docker rm motioneye
motioneye
motioneye
```

The Torizon architecture uses OSTree for the base operating system image and Docker for the application stack. You can read a more thorough description of this architecture here. The next step in developing our security monitor is to create a docker-compose file that launches motionEye. Let's create a file called motionEye.yml with the following contents. It mirrors the port and volume settings from the command line we used previously to run motionEye manually.

```yaml
version: '2.4'

services:
  motioneye:
    image: ccrisan/motioneye:master-armhf
    ports:
      - 8765:8765/tcp
    volumes:
      - motioneye-etc:/etc/motioneye
      - /etc/localtime:/etc/localtime:ro
      - motioneye-varlib:/var/lib/motioneye

volumes:
  motioneye-etc: {}
  motioneye-varlib: {}
```
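Note that this compose file does not include the `--device` options from Listing 5, so the USB webcam will not be visible inside the OTA-deployed container. If you want it, version 2.x compose files accept a `devices:` list under the service. The sketch below uses a hypothetical `compose_devices` helper to print such a stanza, indented to sit under the `motioneye` service; the node names are the ones from our setup and are an assumption for yours. Keep in mind that Docker may refuse to start the container if a listed node does not exist at launch time.

```shell
#!/bin/sh
# Hypothetical helper: print a docker-compose `devices:` stanza mapping each
# host node to the same path in the container. The 4/6-space indentation
# assumes the layout of motionEye.yml (service keys at 4 spaces).
compose_devices() {
    printf '    devices:\n'
    for node in "$@"; do
        printf '      - %s:%s\n' "$node" "$node"
    done
}
```

For example, `compose_devices /dev/video2 /dev/video3` prints a stanza you could paste below the `ports:` section of motionEye.yml.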

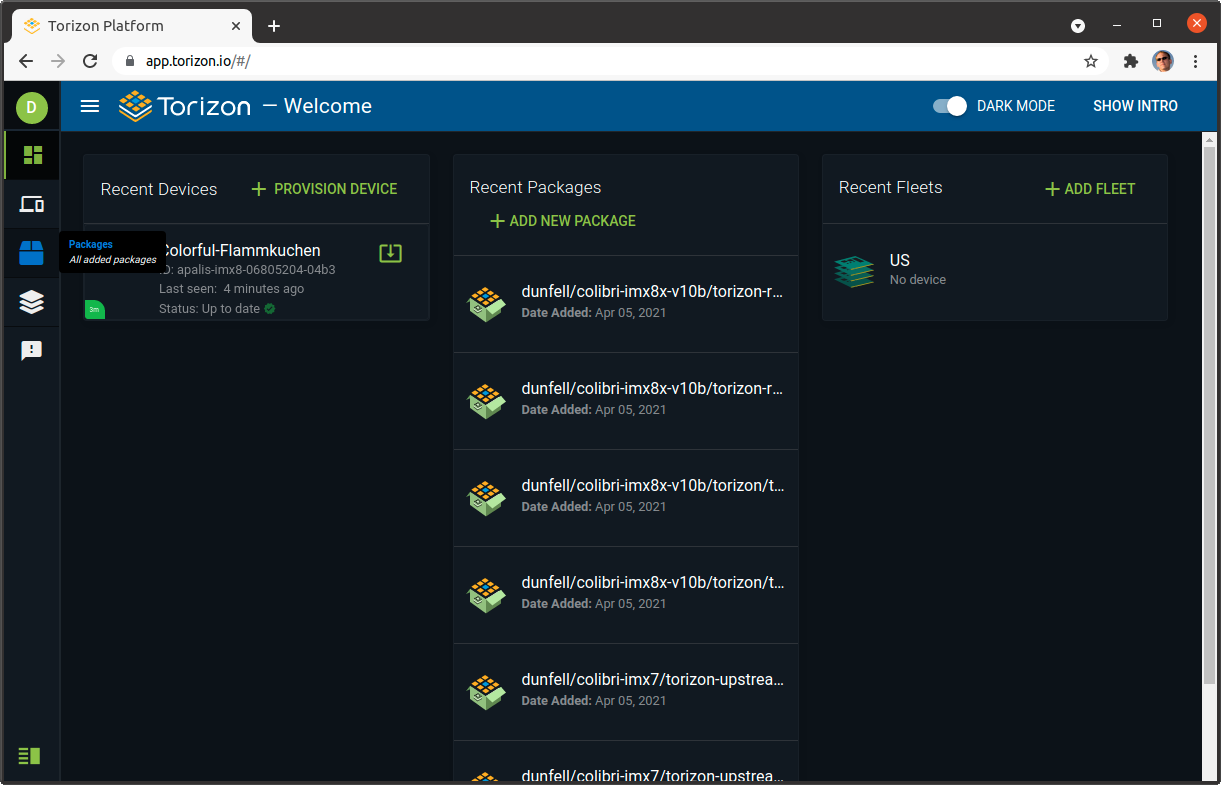

Now select the packages list from the sidebar as shown in Figure 8.

Next, click the + ADD NEW PACKAGE link and follow the prompts to upload the motionEye.yml file. Once the package has been created on the server, you can return to the dashboard and click the link to initiate an update to your chosen device.

Make sure Custom Packages is selected, then use the Select package dropdown to choose your motionEye.yml package. There should only be one version available. Click Continue to start the OTA deployment of your application stack. In a few minutes, you should be able to connect to the motionEye web user interface and configure your cameras.

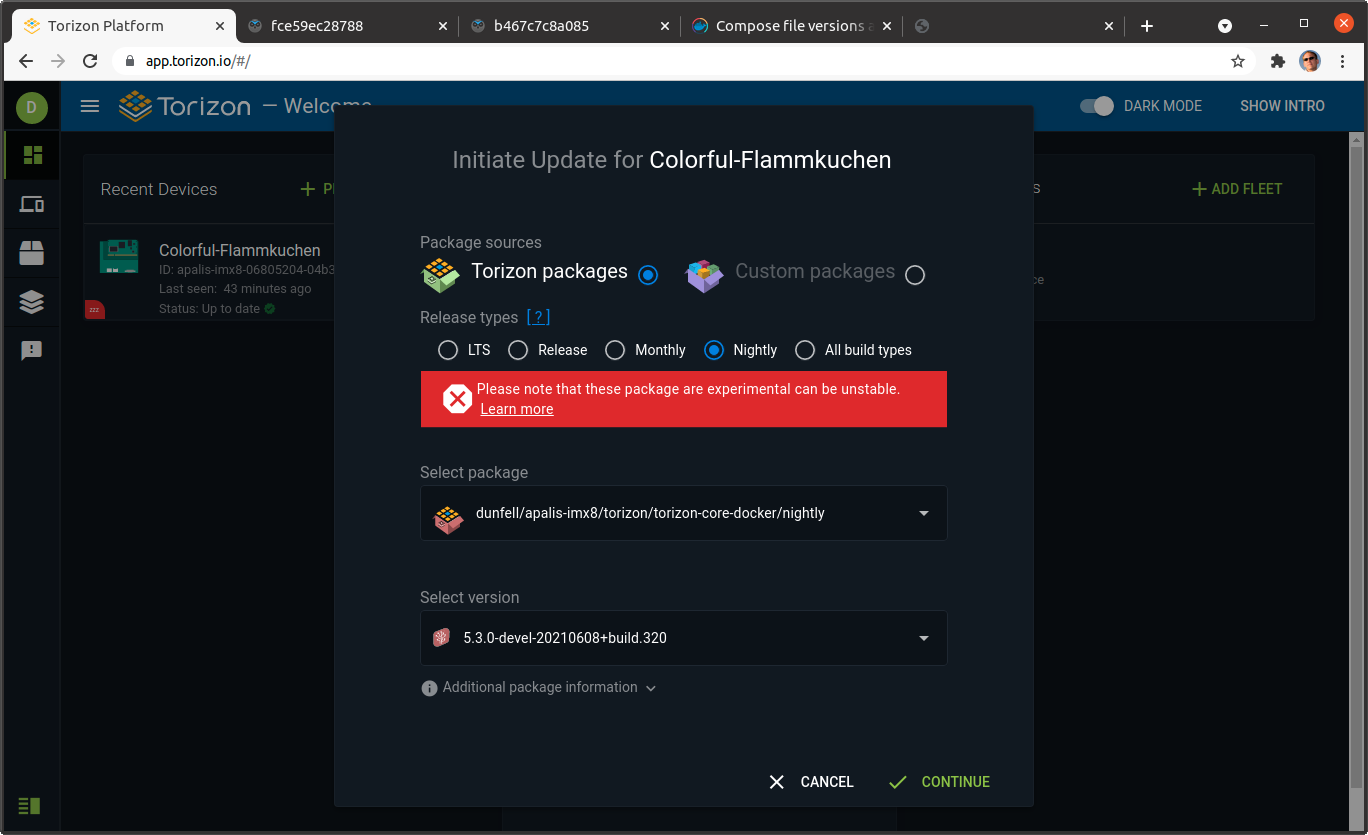

TorizonCore keeps the container runtime data store completely separate from the OSTree repository information used to store the base operating system. This means that we can update both applications and operating system independently. Let's test this by upgrading to a nightly build of TorizonCore. Note that these builds are not fully vetted for production use and we only recommend you use them for testing specific issues or prototyping as we are doing here.

First, from a shell prompt on your device, let's determine the existing TorizonCore version. Listing 8 shows that we are currently running version 5.1.0+build.1. Yours, of course, may be different.

```
apalis-imx8-06805204:~$ cat /etc/os-release
ID=torizon
NAME="TorizonCore"
VERSION="5.1.0+build.1 (dunfell)"
VERSION_ID=5.1.0-build.1
PRETTY_NAME="TorizonCore 5.1.0+build.1 (dunfell)"
BUILD_ID="1"
ANSI_COLOR="1;34"
```
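Because /etc/os-release consists of shell-style `KEY=value` assignments, a script can read the version by sourcing the file in a subshell. This is a minimal sketch; the helper name `os_version_id` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print VERSION_ID from an os-release file
# (default /etc/os-release). The file is sourced inside a subshell so its
# variables (ID, NAME, VERSION, ...) do not leak into the calling shell.
os_version_id() {
    ( . "${1:-/etc/os-release}" && printf '%s\n' "$VERSION_ID" )
}
```

Run with no arguments on the device to read /etc/os-release; for the file in Listing 8 it prints `5.1.0-build.1`.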

To update to the latest nightly build, navigate to the Torizon platform web dashboard and select the link to initiate an update. This time, select Torizon packages, the Nightly release type, and the dunfell/apalis-imx8/torizon/torizon-core-docker/nightly package (or the appropriate package for your hardware). Then select the latest version available; in my case, it is 5.3.0-devel-20210608+build.320. Click the CONTINUE button to start the deployment.

After a few minutes, your system will reboot and you can review the /etc/os-release file again to ensure that the OS version has been updated. You can also verify that the motionEye container is still running, having properly persisted across an OS update.

```
apalis-imx8-06805204:~$ cat /etc/os-release
ID=torizon
NAME="TorizonCore"
VERSION="5.3.0-devel-20210608+build.320 (dunfell)"
VERSION_ID=5.3.0-devel-20210608-build.320
PRETTY_NAME="TorizonCore 5.3.0-devel-20210608+build.320 (dunfell)"
BUILD_ID="320"
ANSI_COLOR="1;34"
VARIANT="Docker"
apalis-imx8-06805204:~$ docker ps
CONTAINER ID   IMAGE                            COMMAND                  CREATED          STATUS          PORTS                    NAMES
0f2a243c9951   ccrisan/motioneye:master-armhf   "/bin/sh -c 'test -e…"   45 seconds ago   Up 42 seconds   0.0.0.0:8765->8765/tcp   torizon_motioneye_1
```
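The two checks above (a new OS version and a still-running container) can be combined into a small script, which is handy if you repeat this test often. This is a minimal sketch: the `check_update` helper is hypothetical; it takes the `VERSION_ID` recorded before the update, the current one, and the container name, and it reads running container names from standard input.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the OS version changed AND the named
# container appears in the list of running container names on stdin
# (e.g. the output of `docker ps --format '{{.Names}}'`).
check_update() {
    old="$1"; new="$2"; name="$3"
    if [ "$old" = "$new" ]; then
        echo "OS version unchanged: $new" >&2
        return 1
    fi
    # -x: match the whole line, so "motioneye" does not match "motioneye_2"
    if ! grep -qx -- "$name"; then
        echo "container $name is not running" >&2
        return 1
    fi
    echo "updated $old -> $new; $name still running"
}
```

For example, record `OLD=$(. /etc/os-release; echo "$VERSION_ID")` before the update, then after the reboot run `docker ps --format '{{.Names}}' | check_update "$OLD" "$(. /etc/os-release; echo "$VERSION_ID")" torizon_motioneye_1`.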

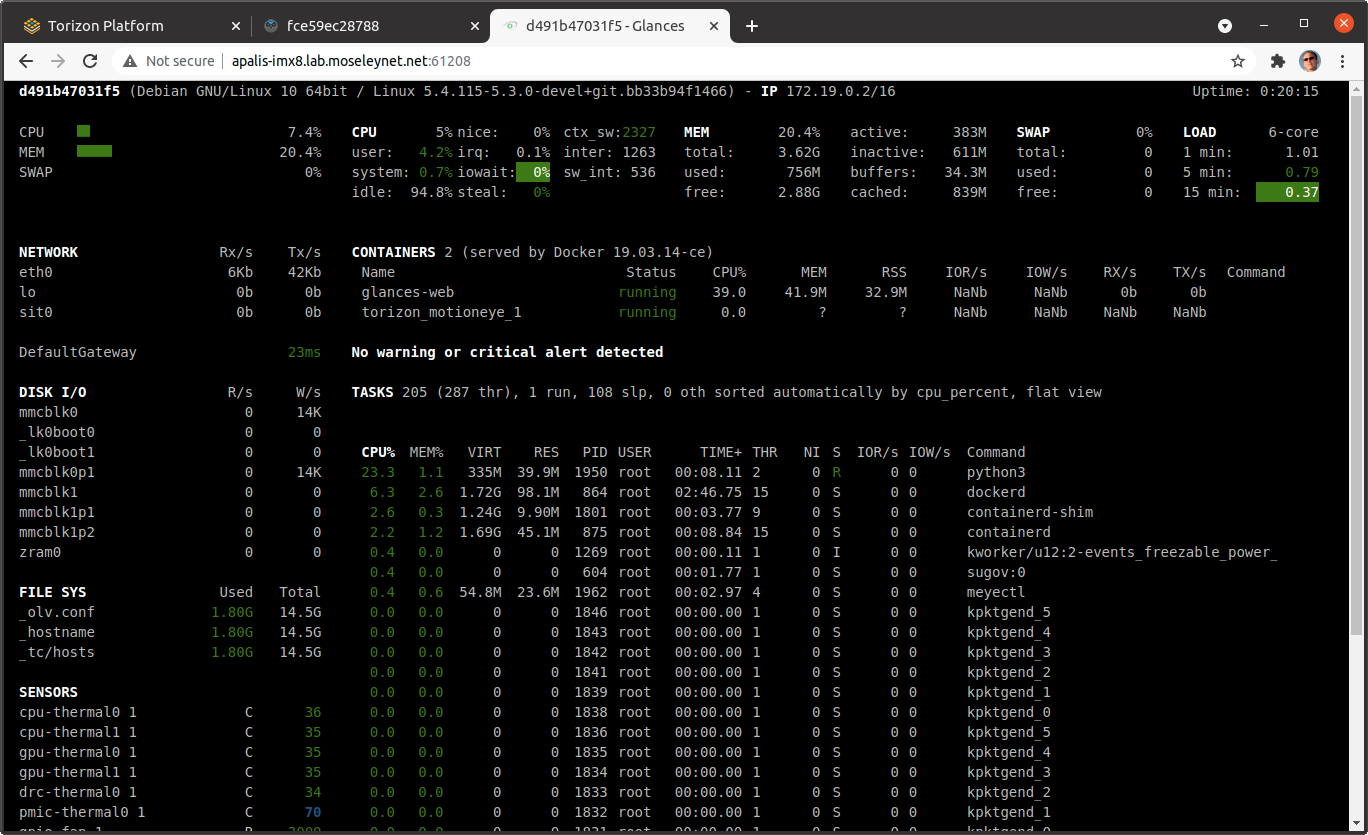

Now, let's wrap up with a slightly more complicated multi-container scenario. Normally, you would use multiple containers to implement different services of a complete application, but in this case, we will just add an independent container to demonstrate the setup. We will add a container running Glances so that you can monitor system utilization on your board through a web interface. To add this container, insert the following contents into the services section of your motionEye.yml file (that is, before the top-level volumes section).

```yaml
  glances-web:
    image: nicolargo/glances:dev
    container_name: glances-web
    environment:
      - GLANCES_OPT=-w
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:ro
    ports:
      - 61208-61209:61208-61209
    pid: "host"
```

Follow the steps you used earlier to upload and deploy your package; in a few minutes, you should see something like Figure 11 on port 61208 of your device.

This post covered setting up TorizonCore with Wi-Fi, running the motionEye video surveillance system in a container, and using the Torizon Platform to deploy both the application stack and an operating system update over the air. You can find out more about the Torizon Platform here. It is important to note that we only covered the basics of using the Torizon Platform for over-the-air updates. There are many other considerations when using this (or any) system in production. Notably, we did not discuss security in this post, and the services we installed display potentially sensitive information over web-based interfaces; if you plan to implement such a system, you will need to investigate the security of these interfaces and make sure your users are properly protected.

Another important consideration is production provisioning of your devices. Most of the configuration changes we made in this post required manual steps on each device. That works well in a development environment or for a small-scale prototype but quickly becomes burdensome as your fleet grows. Torizon provides a tool called torizoncore-builder for creating customized images that include such things as Wi-Fi credentials, bundled containers, and custom kernel drivers. You can use it to create an image with all of your configuration baked in, so that your manufacturing team only needs to flash your custom image for the systems to be usable. You will still need a mechanism to handle unique data such as serial numbers, since those cannot be baked into an image that is flashed onto multiple devices.

Finally, you will likely want some means to store your custom configuration data in a source code management system such as git. The torizoncore-builder tool has a subcommand called build which is designed specifically for this. You store your entire configuration as a series of build steps, controlled by a single configuration file. This configuration file and other files that are referenced by it can easily be stored in a git repository.

We hope you like Torizon and that it is useful in solving your device system needs.

Drew Moseley, Technical Solutions Architect, Toradex
